Summary from Research

In conclusion, when we care about how the model performs at different or specific IoU thresholds, i.e., when bounding box accuracy matters, we use mAP.

However, if a model already localizes target objects well but misses detections because the targets are too diverse, then IoU is not our concern and we can simply use the F1-score.

F1-Score

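Define

$$
\text{precision} = \frac{TP}{TP + FP}, \qquad \text{recall} = \frac{TP}{TP + FN},
$$

then we define F1-score to be

$$
F_1 = \frac{2 \cdot \text{precision} \cdot \text{recall}}{\text{precision} + \text{recall}}.
$$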

I refer to [ZL] for more detail on the F1-score and how it behaves compared to the arithmetic mean and the geometric mean. In short, the F1-score penalizes unbalanced precision and recall.
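To make the comparison concrete, here is a minimal sketch that computes the three means for a balanced and an unbalanced pair of precision and recall. The function names and the sample values are illustrative assumptions, not taken from [ZL].

```python
import math


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def compare_means(precision: float, recall: float) -> dict:
    """Return the arithmetic mean, geometric mean and F1-score side by side."""
    return {
        "arithmetic": (precision + recall) / 2,
        "geometric": math.sqrt(precision * recall),
        "f1": f1_score(precision, recall),
    }


# Made-up values: both pairs have the same arithmetic mean.
balanced = compare_means(0.5, 0.5)
unbalanced = compare_means(0.9, 0.1)

print(balanced)
print(unbalanced)
```

Both pairs share the same arithmetic mean, but the F1-score of the unbalanced pair drops the most, with the geometric mean in between.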

mAP

Precision and Recall

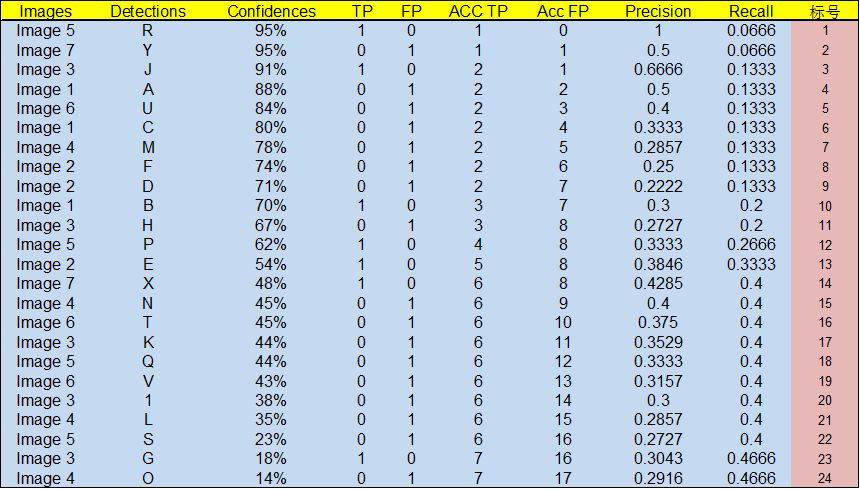

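Given that we have fixed a label to work with, say signboard, we need a table of the predicted boxes for that label together with

$$
\text{precision} = \frac{TP}{TP + FP}, \qquad \text{recall} = \frac{TP}{\text{number of ground truths}}.
$$

Note that the denominator of recall is the total number of ground truths from all images, not just from a single image.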

By sorting the results by objectness/confidence score, we get a table like:
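A table of this kind could be built roughly as follows. This is a sketch under assumptions: the `Detection` class, the function name, and the sample detections are hypothetical, and each box is assumed to have already been marked correct or incorrect (for example by an IoU match against the ground truths).

```python
from dataclasses import dataclass


@dataclass
class Detection:
    confidence: float  # objectness/confidence score of the predicted box
    is_correct: bool   # True if the box matches a ground truth of the label


def precision_recall_table(detections, num_ground_truths):
    """Return rows (confidence, is_correct, precision, recall), highest confidence first."""
    ranked = sorted(detections, key=lambda d: d.confidence, reverse=True)
    rows = []
    true_positives = 0
    for rank, det in enumerate(ranked, start=1):
        if det.is_correct:
            true_positives += 1
        precision = true_positives / rank            # correct boxes among the top `rank`
        recall = true_positives / num_ground_truths  # denominator spans all images
        rows.append((det.confidence, det.is_correct, precision, recall))
    return rows


# Made-up detections for one label, with 4 ground truths over all images.
detections = [
    Detection(0.60, False),
    Detection(0.95, True),
    Detection(0.80, True),
    Detection(0.40, True),
]
table = precision_recall_table(detections, num_ground_truths=4)
for confidence, ok, precision, recall in table:
    print(f"{confidence:.2f}  {str(ok):5}  precision={precision:.2f}  recall={recall:.2f}")
```

Recall never decreases as we move down the table, while precision drops whenever an incorrect box is encountered.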

Why Recall

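Precision, $\frac{TP}{TP + FP}$ (where $TP$ and $FP$ are the numbers of boxes identified as signboard correctly and incorrectly), is not enough, as it does not count undetected signboards. A model that outputs a single correct box has perfect precision even if it misses every other signboard; recall is what captures those misses.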

Calculation of mAP from the Graph

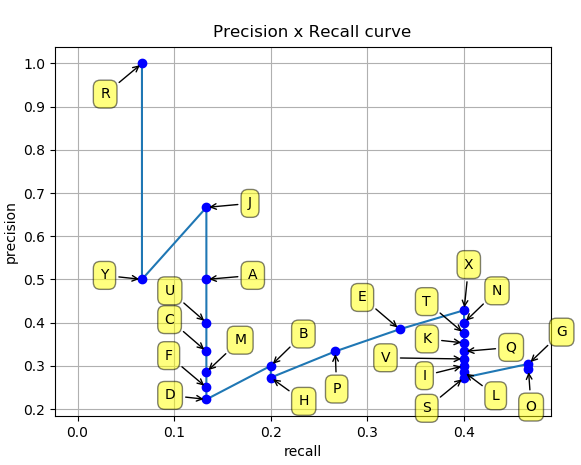

Using the precision and recall columns, we can plot precision against recall as follows:

Note that the area under this path is exactly the average precision (that is why recall is a fraction: it normalizes the horizontal axis to the range from 0 to 1, so the area is an average of precision values).
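One way to read that area, sketched below, is as a rectangle-rule sum: each row contributes its precision times the increase in recall it brings. This is an assumption about how the path is integrated, and the function name and sample columns are made up for illustration.

```python
def area_under_pr_curve(precisions, recalls):
    """Sum precision * (increase in recall); rows are ordered by descending confidence."""
    area = 0.0
    previous_recall = 0.0
    for precision, recall in zip(precisions, recalls):
        area += precision * (recall - previous_recall)
        previous_recall = recall
    return area


# Made-up precision and recall columns (4 detections, 4 ground truths).
precisions = [1.0, 1.0, 2 / 3, 0.75]
recalls = [0.25, 0.5, 0.5, 0.75]

ap = area_under_pr_curve(precisions, recalls)
print(f"average precision = {ap:.4f}")
```

Rows that add no recall (incorrect boxes) contribute nothing, so the sum equals the precision values at the correct boxes added up and divided by the number of ground truths.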

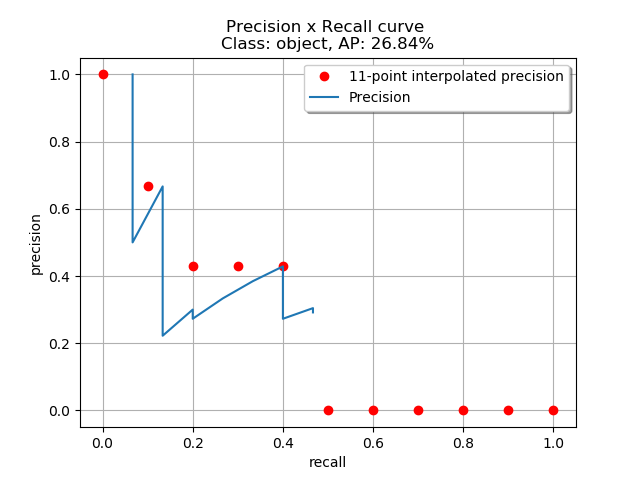

Programmatically, we do not calculate that area directly. Instead, we smooth the graph out to get an approximate average precision in the following way:

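Each new dot is exactly the value $p_{\text{interp}}(r) = \max_{\tilde r \ge r} p(\tilde r)$, where $r$ is the recall and $p(\tilde r)$ is the precision at recall $\tilde r$. With $R$ the set of sampled recall levels (for instance the eleven levels $0, 0.1, \dots, 1$ of the Pascal VOC 2007 convention), our approximated average precision is

$$
\text{AP} \approx \frac{1}{|R|} \sum_{r \in R} p_{\text{interp}}(r),
$$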

which is, for example,

in the figure above.
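The smoothing described above can be sketched as follows. The eleven evenly spaced recall levels are an assumption borrowed from the Pascal VOC 2007 convention, since the sampling is not spelled out here; the function names and sample columns are likewise illustrative.

```python
def interpolated_precision(precisions, recalls, r):
    """Highest precision among points whose recall is at least r (0.0 if there is none)."""
    candidates = [p for p, rec in zip(precisions, recalls) if rec >= r]
    return max(candidates, default=0.0)


def eleven_point_ap(precisions, recalls):
    """Average the interpolated precision over recall levels 0.0, 0.1, ..., 1.0."""
    levels = [i / 10 for i in range(11)]  # assumed sampling of the recall axis
    total = sum(interpolated_precision(precisions, recalls, r) for r in levels)
    return total / len(levels)


# Made-up precision and recall columns (4 detections, 4 ground truths).
precisions = [1.0, 1.0, 2 / 3, 0.75]
recalls = [0.25, 0.5, 0.5, 0.75]

approx_ap = eleven_point_ap(precisions, recalls)
print(f"11-point interpolated AP = {approx_ap:.4f}")
```

Taking the maximum over all recalls at or beyond $r$ removes the zigzag in the curve, so the smoothed precision never increases as recall grows. mAP is then the mean of these per-label AP values over all labels.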

References

- [ZL] Zeya LT, Essential Things You Need to Know About F1-Score