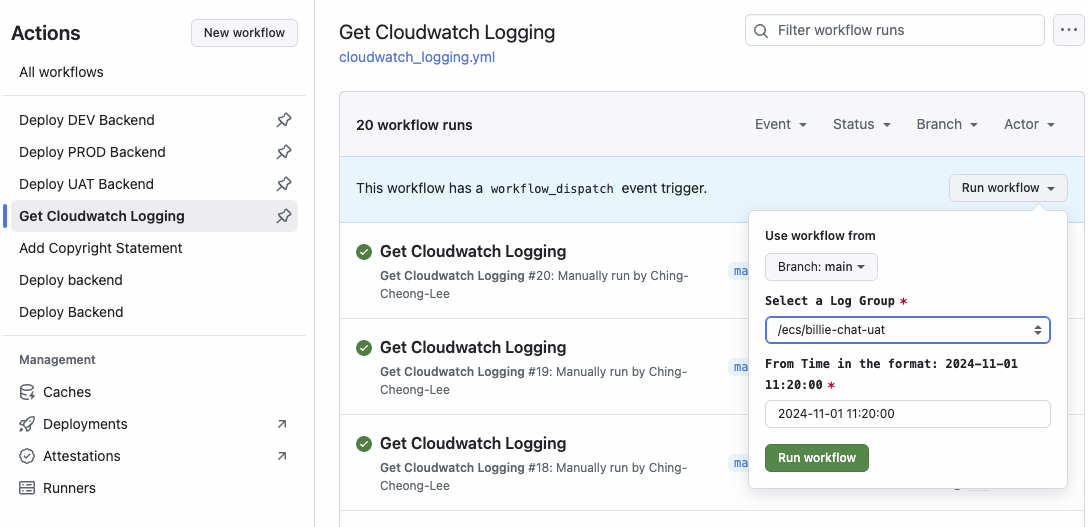

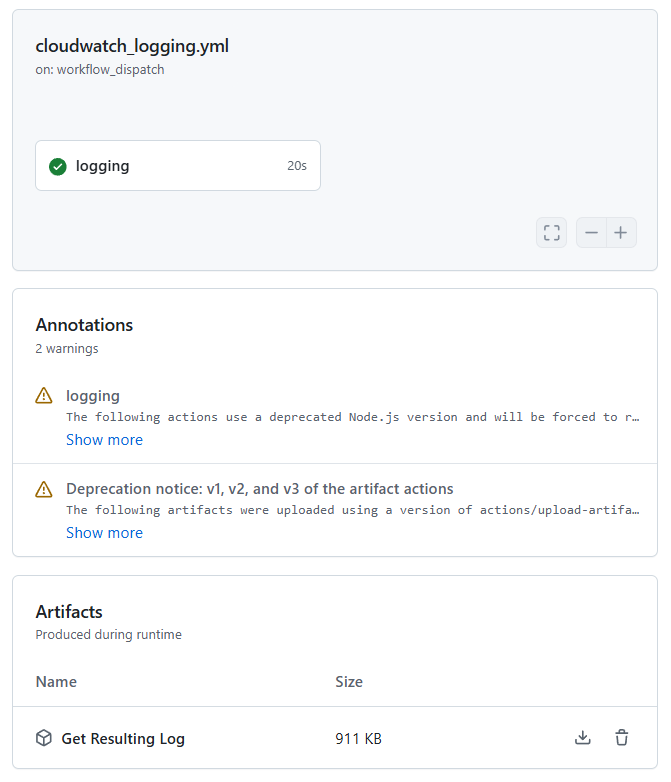

Results

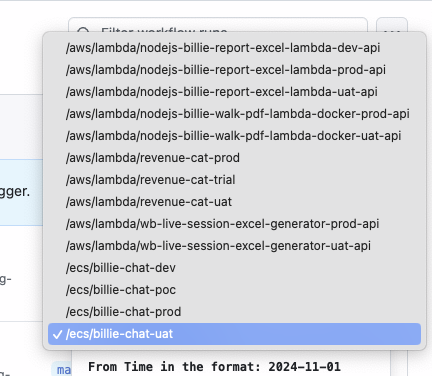

Selection List in Workflow Dispatch

Download the Artifact

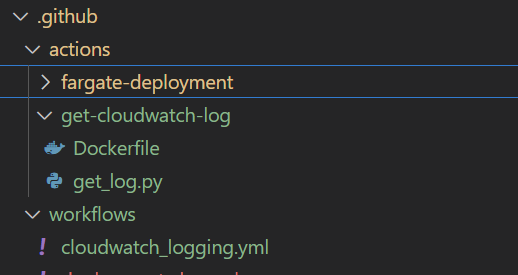

The Project Structure

Unlike a JavaScript action, this time we don't implement an `action.yml` to organize the input variables.

For an example of a JavaScript action, the reader may refer to Github Action for Deployment on ECS Fargate, where you can see that `action.yml` is used to interact with the `with` part of each step in a job.

.github/actions/get-cloudwatch-log/Dockerfile

```dockerfile
FROM realsalmon/alpinelinux-python-boto3
COPY get_log.py /get_log.py
CMD ["python", "/get_log.py"]
```

.github/actions/get-cloudwatch-log/get_log.py

```python
import boto3
import os
from datetime import datetime, timezone, timedelta

def text_transform(text):
    try:
        return text.encode().decode("unicode_escape").replace("\t", " ")
    except Exception as e:
        print(f"{e}")


def timestamp_transform(timestamp_ms):
    return datetime.fromtimestamp(timestamp_ms/1000).strftime("%Y-%m-%d %H:%M:%S")


def convert_to_utc8(timestamp_ms):
    dt = datetime.fromtimestamp(timestamp_ms/1000, tz=timezone.utc)
    tz_utc8 = timezone(timedelta(hours=8))
    dt_utc8 = dt.astimezone(tz_utc8)
    return dt_utc8.strftime("%Y-%m-%d %H:%M:%S")
```

- By default, a timestamp converted from boto3's milliseconds via Python's `datetime` package is expressed in the timezone of the machine that runs the GitHub Actions worker.
- To get `UTC+8` we need to adjust the timezone manually, otherwise we get a time 8 hours earlier.
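The two directions of this conversion can be sketched with the standard library alone. The helper names `to_utc8_string` and `utc8_string_to_ms` are hypothetical and not part of `get_log.py`; the sketch only assumes that CloudWatch timestamps are milliseconds since the Unix epoch.

```python
from datetime import datetime, timezone, timedelta

UTC8 = timezone(timedelta(hours=8))
FMT = "%Y-%m-%d %H:%M:%S"


def to_utc8_string(timestamp_ms):
    # Interpret the epoch milliseconds as UTC first, then shift to UTC+8,
    # so the result does not depend on the runner's local timezone.
    dt = datetime.fromtimestamp(timestamp_ms / 1000, tz=timezone.utc)
    return dt.astimezone(UTC8).strftime(FMT)


def utc8_string_to_ms(text):
    # Attach UTC+8 to the naive datetime before asking for the epoch value.
    dt = datetime.strptime(text, FMT).replace(tzinfo=UTC8)
    return int(dt.timestamp() * 1000)
```

Because both helpers carry an explicit timezone, converting a string to milliseconds and back returns the original string on any machine.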

```python
def getter(obj, key, default_value):
    value = None
    try:
        v = obj[key]
        if v is not None:
            value = v
        else:
            raise Exception("cannot be None")
    except Exception as e:
        value = default_value
    return value

def main():
    # os.environ.get returns None for a missing variable,
    # so the None checks below can actually be reached
    LOG_GROUP = os.environ.get("LOG_GROUP")
    FROM_TIMESTAMP = os.environ.get("START_FROM")
    END_TIMESTAMP = None
    REGION_NAME = "ap-southeast-2"
    N = 10

    client = boto3.client('logs', region_name=REGION_NAME)

    # Determine if we should end early
    should_end = False
    if LOG_GROUP is None:
        print("Argument: --log_group= \tFor example: //ecs/billie-chat-prod, ('/' will be resolved into local file system in git-bash)")
        should_end = True
    else:
        LOG_GROUP = LOG_GROUP.replace("//", "/")

    if FROM_TIMESTAMP is None:
        print("Argument: --start= \texample: \"2024-05-09 12:00:00\" in your local time")
        print("Argument: --end= \tis optional and the default is set to be current")
        should_end = True
    else:
        # The input is interpreted as a UTC+8 wall-clock time
        dt = datetime.strptime(FROM_TIMESTAMP.strip(), '%Y-%m-%d %H:%M:%S')
        tz = timezone(timedelta(hours=8))
        dt = dt.replace(tzinfo=tz)
        FROM_TIMESTAMP = int(dt.timestamp()*1000)
    END_TIMESTAMP = int(datetime.strptime(END_TIMESTAMP, '%Y-%m-%d %H:%M:%S').timestamp()*1000) \
        if END_TIMESTAMP is not None \
        else int(datetime.now().timestamp()*1000)
    if should_end is True:
        return

    print("LOG_GROUP\t", LOG_GROUP)
    print("TIMESTAMP\t", FROM_TIMESTAMP, ">>", END_TIMESTAMP)

    FILE_DIR = "/github/workspace"
```

- The directory `/github/workspace` inside the container is bind-mounted to the working directory of the GitHub Actions job.
- In other words, a file saved at `/github/workspace/haha.txt` inside the container is available to the remaining steps of the job outside the container (at the root of the project).
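As a rough illustration of that hand-off, the sketch below writes a file into the mounted directory from Python. The helper `save_to_workspace` is hypothetical; it assumes the container was started with the workspace mounted at `/github/workspace`, and it takes the directory as a parameter so that it can also be tried locally.

```python
import os


def save_to_workspace(filename, content, workspace="/github/workspace"):
    # Create the directory if the mount point does not exist yet
    # (for example when running outside the container).
    os.makedirs(workspace, exist_ok=True)
    path = os.path.join(workspace, filename)
    with open(path, "w", encoding="utf8") as file:
        file.write(content)
    return path
```

Calling `save_to_workspace("haha.txt", "hello\n")` inside the container would leave `haha.txt` at the root of the checked-out repository on the runner, where a later step can pick it up.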

```python
    if not os.path.exists(FILE_DIR):
        os.makedirs(FILE_DIR)

    log_group_name = LOG_GROUP

    log_streams = client.describe_log_streams(
        logGroupName=log_group_name,
        orderBy="LastEventTime",
        descending=True
    )

    log_stream_names = [desc["logStreamName"]
                        for desc in log_streams["logStreams"]][0: N][::-1]
    i = 0

    SAVE_DESTINIATION = f"{FILE_DIR}/result.log"

    if os.path.exists(SAVE_DESTINIATION):
        os.unlink(SAVE_DESTINIATION)

    with open(SAVE_DESTINIATION, "a+", encoding="utf8") as file:
        for log_stream_name in log_stream_names:
            i += 1
            print()
            print(f"Downloading [{i}-th stream: {log_stream_name}] ...")
            response = {"nextForwardToken": None}
            started = False
            page = 0
            n_lines = 0
            while started is False or response['nextForwardToken'] is not None:
                started = True
                page += 1

                karg = {} if response['nextForwardToken'] is None else {
                    "nextToken": response['nextForwardToken']}
                response = client.get_log_events(
                    startTime=FROM_TIMESTAMP,
                    endTime=END_TIMESTAMP,
                    logGroupName=log_group_name,
                    logStreamName=log_stream_name,
                    startFromHead=True,
                    **karg
                )
                data = response["events"]
                data = sorted(data, key=lambda datum: datum["timestamp"])
                data_ = [{"timestamp": convert_to_utc8(
                    datum["timestamp"]), "message": text_transform(datum["message"])} for datum in data]
                if len(data_) == 0:
                    if page > 1:
                        print()
                    print("No more data for the current stream")
                    break
                n_lines += len(data_)
                print(
                    f"Loading Page {page}, accumulated: {n_lines} lines ", end="\r")
                for datum in data_:
                    print("datum", datum)
                    line = getter(datum, "timestamp", "") + " |" + \
                        "\t" + getter(datum, "message", "") + "\n"
                    file.write(line)


if __name__ == "__main__":
    main()
```
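The paging loop above can also be isolated into a small generator. This is a sketch rather than the script's own code: `iter_log_events` is a hypothetical helper, and it relies on the documented behaviour of `get_log_events` that, once the end of a stream is reached, the returned `nextForwardToken` equals the token that was passed in. `client` is assumed to be a `boto3.client("logs")` or any object with a compatible `get_log_events` method.

```python
def iter_log_events(client, log_group, log_stream, start_ms, end_ms):
    """Yield the events of one log stream page by page, oldest first."""
    token = None
    while True:
        # Only pass nextToken after the first page has been fetched.
        kwargs = {"nextToken": token} if token is not None else {}
        response = client.get_log_events(
            logGroupName=log_group,
            logStreamName=log_stream,
            startTime=start_ms,
            endTime=end_ms,
            startFromHead=True,
            **kwargs,
        )
        yield from response["events"]
        next_token = response.get("nextForwardToken")
        # Stop when the token is missing or no longer advances.
        if next_token is None or next_token == token:
            break
        token = next_token
```

Compared with stopping at the first empty page, comparing tokens costs one extra request per stream but does not end early if an intermediate page happens to be empty.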

.github/workflows/cloudwatch_logging.yml with Retention Period

```yaml
name: 'Get Cloudwatch Logging'

on:
  workflow_dispatch:
    inputs:
      log_group:
        description: 'Select a Log Group'
        required: true
        default: '/ecs/billie-chat-dev'
        type: choice
        options:
          - /aws/lambda/nodejs-billie-report-excel-lambda-dev-api
          - /aws/lambda/nodejs-billie-report-excel-lambda-prod-api
          - /aws/lambda/nodejs-billie-report-excel-lambda-uat-api
          - /aws/lambda/nodejs-billie-walk-pdf-lambda-docker-prod-api
          - /aws/lambda/nodejs-billie-walk-pdf-lambda-docker-uat-api
          - /aws/lambda/revenue-cat-prod
          - /aws/lambda/revenue-cat-trial
          - /aws/lambda/revenue-cat-uat
          - /aws/lambda/wb-live-session-excel-generator-prod-api
          - /aws/lambda/wb-live-session-excel-generator-uat-api
          - /ecs/billie-chat-dev
          - /ecs/billie-chat-poc
          - /ecs/billie-chat-prod
          - /ecs/billie-chat-uat
      start_from:
        type: string
        description: "From Time in the format: 2024-11-01 11:20:00"
        required: true

jobs:
  logging:
    runs-on: ubuntu-latest
    environment: deployment
    env:
      AWS_ACCESS_KEY_ID: ${{ secrets.AWS_ACCESS_KEY_ID }}
      AWS_SECRET_ACCESS_KEY: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
    steps:
      - name: Get Code
        uses: actions/checkout@v4
        with:
          ref: main
          fetch-depth: 1
      - name: Run Docker Action
        run: |
          docker build -t get-cloudwatch-log .github/actions/get-cloudwatch-log
          docker run --user root \
            -v "${{ github.workspace }}":/github/workspace:rw \
            -e AWS_ACCESS_KEY_ID="${{ env.AWS_ACCESS_KEY_ID }}" \
            -e AWS_SECRET_ACCESS_KEY="${{ env.AWS_SECRET_ACCESS_KEY }}" \
            -e LOG_GROUP="${{ github.event.inputs.log_group }}" \
            -e START_FROM="${{ github.event.inputs.start_from }}" \
            get-cloudwatch-log
      - name: Upload Artifact
        uses: actions/upload-artifact@v3
        with:
          name: Get Resulting Log
          path: result.log
          retention-days: 5
```