1. Result

1. Result

2. Dependencies via pyproject.toml

2. Dependencies via

pyproject.tomlWe first uv init to generate pyproject.toml and .python-version separately.

Next inside .python-version we write 3.12.8 and in pyproject.toml we fill in the depenendencies.

Remark. I didn't minimize this bulky list as I learned it from some other RAG course, but uv is already insanely fast so it doesn't quite matter.

1[project] 2name = "app" 3version = "0.1.0" 4description = "Add your description here" 5readme = "README.md" 6requires-python = "==3.12.8" 7dependencies = [ 8 "anthropic>=0.69.0", 9 "beautifulsoup4>=4.14.2", 10 "chromadb>=1.1.0", 11 "datasets==3.6.0", 12 "feedparser>=6.0.12", 13 "google-genai>=1.41.0", 14 "google-generativeai>=0.8.5", 15 "gradio>=5.47.2", 16 "ipykernel>=6.30.1", 17 "ipywidgets>=8.1.7", 18 "jupyter-dash>=0.4.2", 19 "langchain>=0.3.27", 20 "langchain-chroma>=0.2.6", 21 "langchain-community>=0.3.30", 22 "langchain-core>=0.3.76", 23 "langchain-openai>=0.3.33", 24 "langchain-text-splitters>=0.3.11", 25 "litellm>=1.77.5", 26 "matplotlib>=3.10.6", 27 "nbformat>=5.10.4", 28 "modal>=1.1.4", 29 "numpy>=2.3.3", 30 "ollama>=0.6.0", 31 "openai>=1.109.1", 32 "pandas>=2.3.3", 33 "plotly>=6.3.0", 34 "protobuf==3.20.2", 35 "psutil>=7.1.0", 36 "pydub>=0.25.1", 37 "python-dotenv>=1.1.1", 38 "requests>=2.32.5", 39 "scikit-learn>=1.7.2", 40 "scipy>=1.16.2", 41 "sentence-transformers>=5.1.1", 42 "setuptools>=80.9.0", 43 "speedtest-cli>=2.1.3", 44 "tiktoken>=0.11.0", 45 "torch>=2.8.0", 46 "tqdm>=4.67.1", 47 "transformers>=4.56.2", 48 "wandb>=0.22.1", 49 "langchain-huggingface>=1.0.0", 50 "langchain-ollama>=1.0.0", 51 "langchain-anthropic>=1.0.1", 52 "langchain-experimental>=0.0.42", 53 "groq>=0.33.0", 54 "xgboost>=3.1.1", 55 "python-frontmatter>=1.1.0", 56]

3. RAG Implementation

3. RAG Implementation

3.1. Import

3.1. Import

1import os 2import glob 3import tiktoken 4import numpy as np 5from dotenv import load_dotenv 6from langchain_core.messages import SystemMessage, HumanMessage 7from langchain_openai import AzureChatOpenAI 8from langchain_openai import OpenAIEmbeddings 9from langchain_chroma import Chroma 10from langchain_huggingface import HuggingFaceEmbeddings 11from langchain_community.document_loaders import DirectoryLoader, TextLoader 12from langchain_text_splitters import RecursiveCharacterTextSplitter 13from sklearn.manifold import TSNE 14import plotly.graph_objects as go 15 16MODEL = os.getenv("AZURE_OPENAI_MODEL") 17DB_NAME = "vector_db" 18load_dotenv(override=True)

3.2. Define AzureOpenAI Object

3.2. Define AzureOpenAI Object

1from openai import AzureOpenAI 2 3client = AzureOpenAI( 4 api_key=os.getenv("AZURE_OPENAI_API_KEY"), 5 api_version="2025-01-01-preview", 6 azure_endpoint="https://shellscriptmanager.openai.azure.com" 7)

3.3. Get all md Files and Tokenize It

3.3. Get all md Files and Tokenize It

According to this folder structure:

1knowledge_base_path = "../src/mds/articles/**/*.md" 2files = glob.glob(knowledge_base_path, recursive=True) 3print(f"Found {len(files)} files in the knowledge base") 4 5entire_knowledge_base = "" 6 7for file_path in files: 8 with open(file_path, 'r', encoding='utf-8') as f: 9 entire_knowledge_base += f.read() 10 entire_knowledge_base += "\n\n" 11 12 13encoding = tiktoken.encoding_for_model(MODEL) 14tokens = encoding.encode(entire_knowledge_base) 15token_count = len(tokens) 16print(f"Total tokens for {MODEL}: {token_count:,}")

1Found 411 files in the knowledge base 2Total tokens for gpt-4.1-mini: 722,623 3Total tokens for gpt-4.1-mini: 722,623

3.4. Get metadata from md Files and Create Document list

3.4. Get metadata from md Files and Create Document list

1import frontmatter 2from langchain_core.documents import Document 3 4 5def load_md_with_frontmatter(filepath): 6 import frontmatter 7 from langchain_core.documents import Document 8 9 try: 10 post = frontmatter.load(filepath) 11 except Exception as e: 12 print(f"Error loading {filepath}: {e}") 13 raise 14 15 tags = post.get("tag", "") 16 if isinstance(tags, list): 17 tags = ",".join(sorted(tags)) 18 elif isinstance(tags, str) and "," in tags: 19 tags = ",".join(sorted([t.strip() for t in tags.split(",")])) 20 21 return Document( 22 page_content=post.content, 23 metadata={ 24 "title": post.get("title", ""), 25 "date": str(post.get("date", "")), 26 "tags": tags, # Now a string: "db-backup,postgresql,sql" 27 "id": post.get("id", ""), 28 "source": filepath 29 } 30 ) 31 32 33# Load all your markdown files 34documents = [] 35for filepath in glob.glob(knowledge_base_path, recursive=True): 36 try: 37 documents.append(load_md_with_frontmatter(filepath)) 38 except Exception as e: 39 print(f"Skipping {filepath} due to error: {e}")

3.5. Split the Texts with Overlap

3.5. Split the Texts with Overlap

1text_splitter = RecursiveCharacterTextSplitter( 2 chunk_size=1000, chunk_overlap=200) 3chunks = text_splitter.split_documents(documents) 4 5print(f"Divided into {len(chunks)} chunks")

1Divided into 3667 chunks

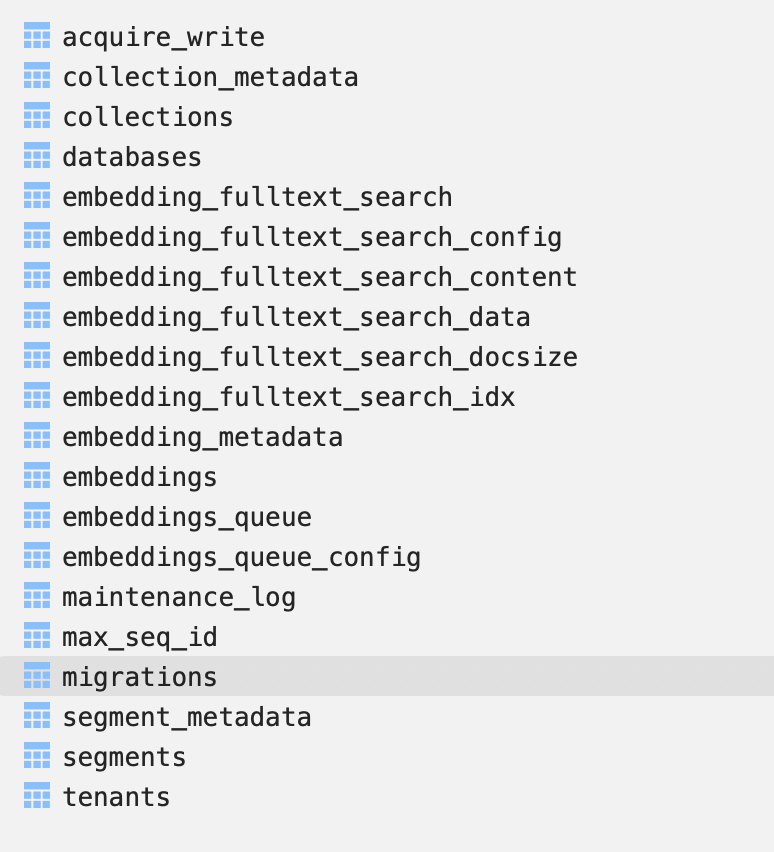

3.6. Embed Documents into Vector DB Chroma

3.6. Embed Documents into Vector DB Chroma

1db_name = "vector_db" 2 3embeddings = HuggingFaceEmbeddings(model_name="all-MiniLM-L6-v2") 4# embeddings = OpenAIEmbeddings(model="text-embedding-3-large") 5 6if os.path.exists(db_name): 7 Chroma(persist_directory=db_name, 8 embedding_function=embeddings).delete_collection() 9 10vectorstore = Chroma.from_documents( 11 documents=chunks, embedding=embeddings, persist_directory=db_name) 12print(f"Vectorstore created with {vectorstore._collection.count()} documents")

1Vectorstore created with 3667 documents

3.7. Create RAG Retriever and Agent

3.7. Create RAG Retriever and Agent

1retriever = vectorstore.as_retriever() 2llm = AzureChatOpenAI( 3 temperature=0, 4 azure_deployment=os.getenv("AZURE_OPENAI_MODEL"), # Your deployment name 5 azure_endpoint="https://shellscriptmanager.openai.azure.com", 6 api_key=os.getenv("AZURE_OPENAI_API_KEY"), 7 api_version="2025-01-01-preview" 8)

3.8. Define a Chat Agent Without any Checkpointer and history

3.8. Define a Chat Agent Without any Checkpointer and history

1SYSTEM_PROMPT_TEMPLATE = """ 2You are a knowledgeable, friendly assistant representing the blog author James Lee. 3You are chatting with a user about articles in the blog. 4If relevant, use the given context to answer any question. 5If you don't know the answer, say so. 6Please list all the related articles and a very brief summary in no more than 30 words in bullet points. 7Context: 8{context} 9""" 10 11def format_docs_with_metadata(docs): 12 formatted = [] 13 for i, doc in enumerate(docs, 1): 14 title = doc.metadata.get("title", "Unknown") 15 source = doc.metadata.get("source", "Unknown") 16 date = doc.metadata.get("date", "") 17 18 formatted.append( 19 f"[Document {i}]\n" 20 f"Title: {title}\n" 21 f"Source: {source}\n" 22 f"Date: {date}\n" 23 f"Content:\n{doc.page_content}" 24 ) 25 return "\n\n===\n\n".join(formatted) 26 27def answer_question(question: str, history): 28 docs = retriever.invoke(question) 29 context = format_docs_with_metadata(docs) 30 print(context) 31 system_prompt = SYSTEM_PROMPT_TEMPLATE.format(context=context) 32 response = llm.invoke( 33 [SystemMessage(content=system_prompt), HumanMessage(content=question)]) 34 return response.content

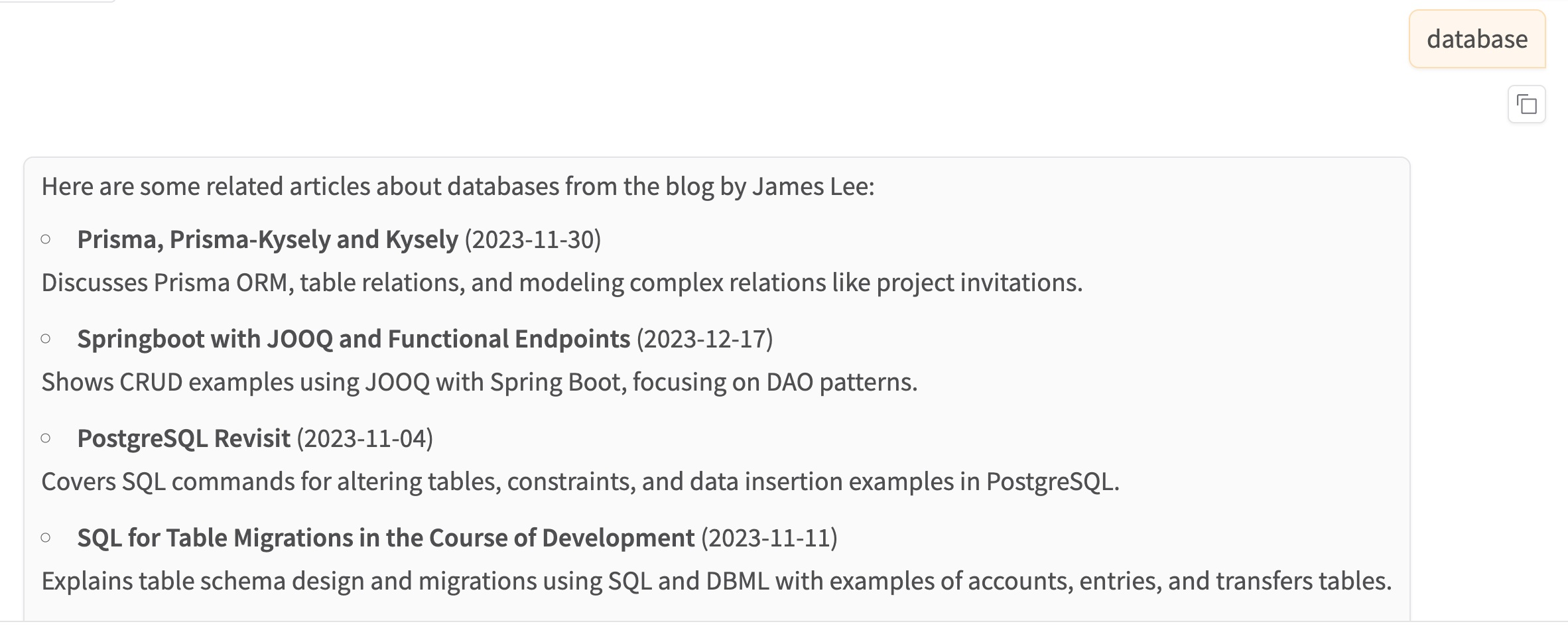

3.9. Run a Chat UI via gradio

3.9. Run a Chat UI via gradio

1gr.ChatInterface(answer_question).launch()

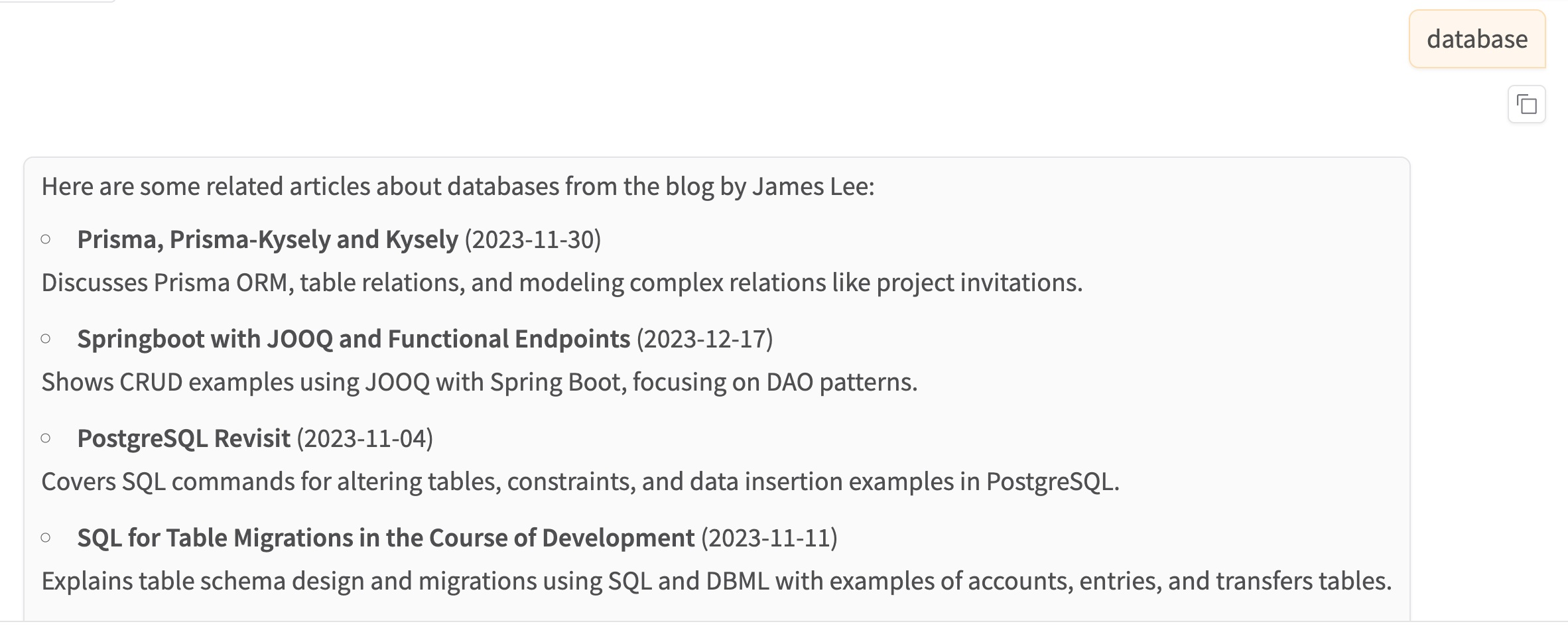

Which results in the chatbot that we displayed at the beginning: